Wednesday, July 1st, 2015

written by Todd Johnson, PhD

Informatics is the science of information. Informaticians identify, define, and solve information problems. Determining whether one drug is better than another drug at treating a certain type of breast cancer is a clinical research question. Developing a method to automatically identify possible drugs for treating breast cancer from the published literature is an information problem.

Another example of an information problem is to automatically create meaningful patient overviews from a patient’s electronic medical record. What information constitutes a good overview? How does “good” depend on the goal of the person looking at the overview? How can you extract meaningful information from the free text in the chart? How can you best present the information so that a person can quickly grasp it?

Suppose you create and test a mobile application to help diabetic patients track meals, exercise, insulin, glucose, and HbA1c, then study whether it improves glucose control. This is not an informatics problem or solution, because it does not identify or solve an information problem. Using a mobile application (IT) to track data does not, by itself, make something an informatics solution: informatics is neither computer science nor IT, though it often uses both as tools. What would be a good informatics problem in this area? One informatics approach is to study the kinds of actions a diabetic patient needs to take and the kinds of information they need to determine which action to take, then create a visualization that allows them to quickly “see” what they need to do.

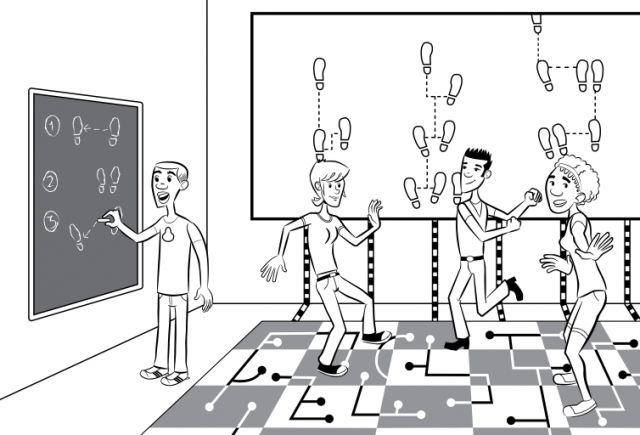

So what do you need to be an informatician? At a minimum, a set of skills called computational thinking, or CT for short. Google nicely breaks CT into four fundamental subskills:

- Decomposition
- Pattern recognition
- Abstraction
- Algorithm design

Although CT, including algorithm design, is not programming or math, at present the best way to learn and demonstrate CT skills is by learning to develop and program algorithms and create computational and mathematical models.
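As a rough illustration of how programming exercises CT, here is a minimal sketch that applies four commonly cited CT subskills (decomposition, pattern recognition, abstraction, and algorithm design) to the diabetes example above. Everything in it is a hypothetical assumption for illustration: the `Reading` type, the functions `classify`, `summarize`, and `suggest_action`, the 70 to 180 mg/dL target range, and the suggested-action rules are invented here and are not clinical guidance or the author's method.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical target range in mg/dL, chosen only for illustration.
LOW_MG_DL = 70
HIGH_MG_DL = 180


@dataclass
class Reading:
    hour: int       # hour of day the reading was taken (0-23)
    glucose: float  # blood glucose in mg/dL


def classify(reading: Reading) -> str:
    """Pattern recognition: label one reading as low, in range, or high."""
    if reading.glucose < LOW_MG_DL:
        return "low"
    if reading.glucose > HIGH_MG_DL:
        return "high"
    return "in range"


def summarize(readings: List[Reading]) -> Dict[str, int]:
    """Abstraction: reduce raw readings to a count per category."""
    counts = {"low": 0, "in range": 0, "high": 0}
    # Decomposition: the whole log is handled one reading at a time.
    for r in readings:
        counts[classify(r)] += 1
    return counts


def suggest_action(summary: Dict[str, int]) -> str:
    """Algorithm design: ordered, hypothetical rules that map a summary to a next step."""
    if summary["low"] > 0:
        return "review low readings first"
    if summary["high"] > summary["in range"]:
        return "review meals and insulin around high readings"
    return "keep current routine"


if __name__ == "__main__":
    log = [Reading(7, 65), Reading(12, 150), Reading(18, 210)]
    summary = summarize(log)
    print(summary)
    print(suggest_action(summary))
```

The interesting part is not the code itself but the choices behind it: which information to keep, which to discard, and in what order to check it. Those are information-problem decisions, and writing them down as a program forces you to make them explicit.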

There are a number of ways you can get these skills. For computational thinking I recommend:

The Udacity classes are very good because of the interactive in-video code editor with real-time feedback. You should be able to sign up for free or low-cost versions of these classes.

Good places to start with mathematical models are these:

If you only have time for a couple, take Intro to CS and Model Thinking first. But keep learning. These are essential skills for informaticians, and as an informatician you will ALWAYS need to learn more, especially technical skills. The more technical skills you have, the more employable you are.

Some people may tell you that many top informaticians never program or develop mathematical models. That’s true, but look at what they do and you will see all of the elements of CT. Learning to program and apply mathematical models is just a path to developing your CT skills.

Too often biomedical informatics programs shy away from teaching or requiring technical skills, because they worry that students from diverse, non-technical backgrounds will not be able to learn them. This is nonsense. First, CT skills are absolutely necessary for doing informatics. Second, like any set of skills, CT skills can be taught and learned. So if you are looking for an informatics program, be sure to choose one that teaches or requires these skills. If you are in a program that does not, make sure you get them for yourself. You simply cannot be an informatician without them.

Todd R. Johnson, PhD, is a professor of biomedical informatics at UTHealth School of Biomedical Informatics (SBMI). Johnson’s efforts at SBMI are focused on the application of informatics in clinical settings, including quality and safety dashboards, visual analytics, clinical research informatics and big data for health care. His research uses cognitive science, computer science and human factors engineering to solve biomedical informatics problems.